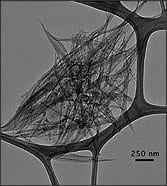

Computers that function like the human brain could soon become a reality thanks to new research using optical fibres made of speciality glass. The research, published in Advanced Optical Mat... Read more

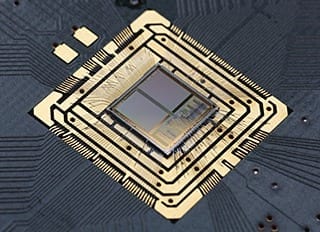

IBM plans to spend $3 billion over the next five years on research and development for computer chips, both to stretch the limits of conventional semiconductors and to hasten the commerciali... Read more

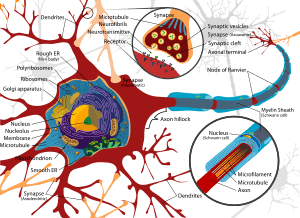

Inspired by nature, scientists from Berlin and Heidelberg use artificial nerve cells to classify different types of data. A bakery assistant who takes the bread from the shelf just to give i... Read more

Computer chips inspired by human neurons can do more with less power. Kwabena Boahen got his first computer in 1982, when he was a teenager living in Accra. “It was a really cool device,” he... Read more

Novel microchips imitate the brain’s information processing in real time. Neuroinformatics researchers from the University of Zurich and ETH Zurich together with colleagues from the EU and U... Read more

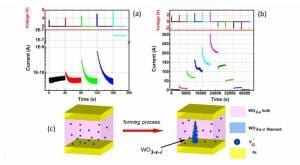

Researchers in Japan and the US propose a nanoionic device with a range of neuromorphic and electrical multifunctions that may allow the fabrication of on-demand configurable circuits, analo... Read more