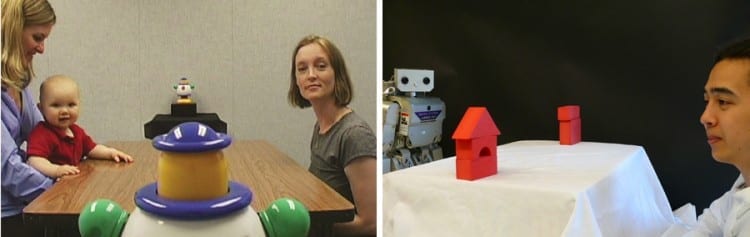

Babies learn about the world by exploring how their bodies move in space, grabbing toys, pushing things off tables and by watching and imitating what adults are doing. But when roboticists w...

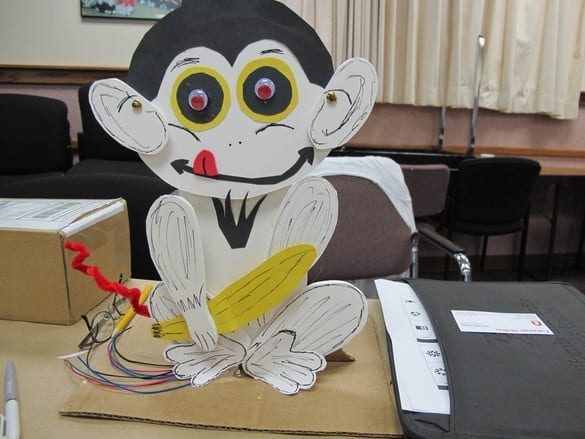

Helps teach and inspire anyone who didn’t think they wanted to make robots or learn about programming. Though it turns out the kit, which shows that engineering can be a creative and art...

Until someone develops a common platform for building robots (think of the combination of Windows and Intel that has made PCs so accessible), the technology will remain elusive to the genera...