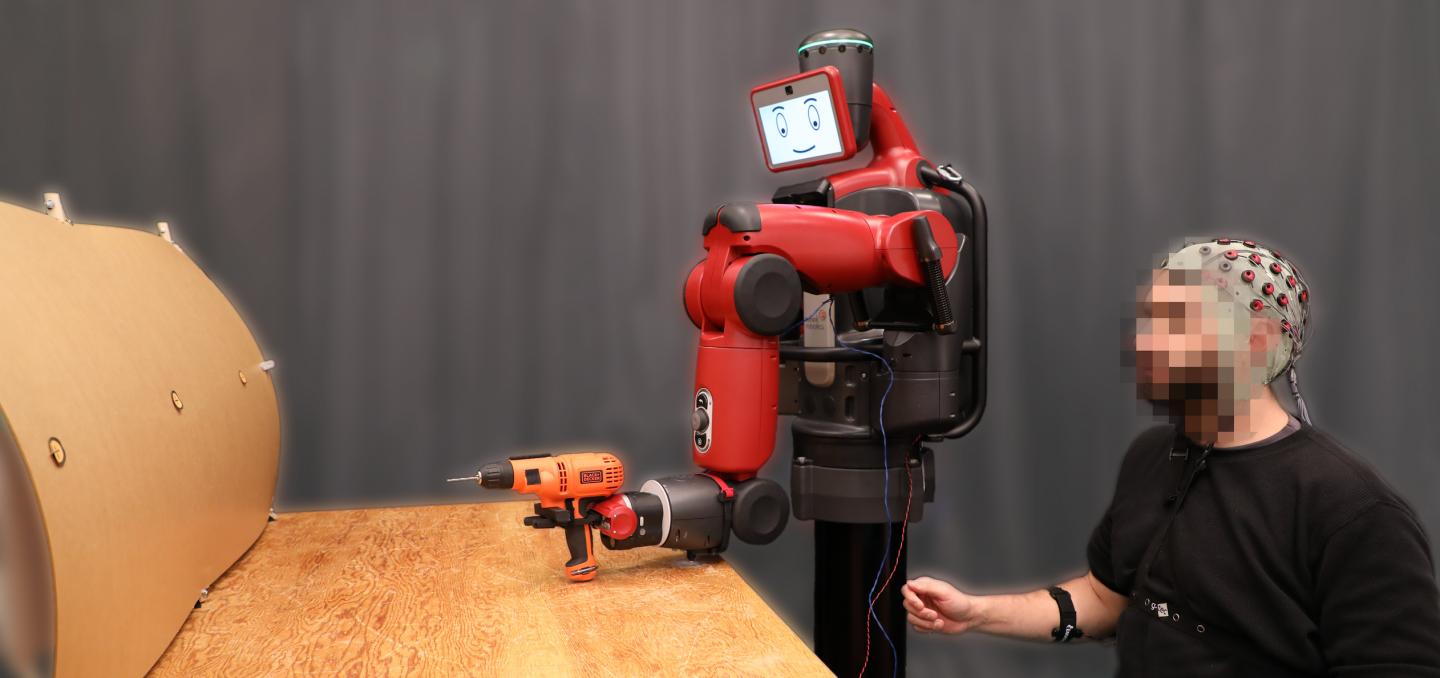

System enables people to correct robot mistakes on multi-choice problems

Getting robots to do things isn’t easy: usually scientists have to either explicitly program them or get them t...

A major step on the road to developing robotic exoskeletons

Monkeys have been trained to control a virtual arm on a computer screen using only their brain waves. Scientists say the animals w...

Brain-Computer Interfacing (BCI) is a hot area of research. In the past year alone we’ve looked at a system to allow people to control a robotic arm and another that enables users to control...

Image by John Swords via Flickr

Japan’s BSI-TOYOTA Collaboration Center has successfully developed a system that controls a wheelchair using brain waves in as little as 125 millisecond...

Using the same technology that allowed them to accurately detect the brain signals controlling arm movements that we looked at last year, researchers at the University of Utah have gone one...

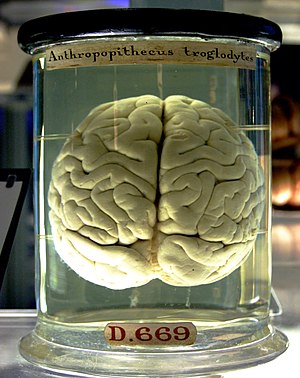

Image via Wikipedia

New research holds promise for a noninvasive brain-computer interface that allows mental control over computers and prosthetics

Our bodies are wired to move, and damaged...

If you’re attending the Winter Olympic Games in Vancouver, British Columbia this month, you’ll have the chance to transmit your brain waves across Canada. When they reach their destination,...