New therapies are on the horizon for individuals paralyzed following spinal cord injury.

The e-Dura implant developed by EPFL scientists can be applied directly to the spinal cord without causing damage and inflammation. The device is described in an article appearing online January 8, 2015, in Science.

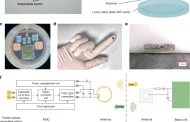

EPFL scientists have managed to get rats walking on their own again using a combination of electrical and chemical stimulation. But applying this method to humans would require multifunctional implants that could be installed for long periods of time on the spinal cord without causing any tissue damage. This is precisely what the teams of professors Stéphanie Lacour and Grégoire Courtine have developed. Their e-Dura implant is designed specifically for implantation on the surface of the brain or spinal cord. The small device closely imitates the mechanical properties of living tissue, and can simultaneously deliver electric impulses and pharmacological substances. The risks of rejection and/or damage to the spinal cord have been drastically reduced. An article about the implant will appear in early January in Science Magazine.

So-called “surface implants” have reached a roadblock; they cannot be applied long term to the spinal cord or brain, beneath the nervous system’s protective envelope, otherwise known as the “dura mater,” because when nerve tissues move or stretch, they rub against these rigid devices. After a while, this repeated friction causes inflammation, scar tissue buildup, and rejection.

An easy-does-it implant

Flexible and stretchy, the implant developed at EPFL is placed beneath the dura mater, directly onto the spinal cord. Its elasticity and its potential for deformation are almost identical to the living tissue surrounding it. This reduces friction and inflammation to a minimum. When implanted into rats, the e-Dura prototype caused neither damage nor rejection, even after two months. More rigid traditional implants would have caused significant nerve tissue damage during this period of time.

The researchers tested the device prototype by applying their rehabilitation protocol, which combines electrical and chemical stimulation, to paralyzed rats. Not only did the implant prove its biocompatibility, but it also did its job perfectly, allowing the rats to regain the ability to walk on their own after a few weeks of training.

“Our e-Dura implant can remain for a long period of time on the spinal cord or the cortex, precisely because it has the same mechanical properties as the dura mater itself. This opens up new therapeutic possibilities for patients suffering from neurological trauma or disorders, particularly individuals who have become paralyzed following spinal cord injury,” explains Lacour, co-author of the paper, and holder of EPFL’s Bertarelli Chair in Neuroprosthetic Technology.

Read more: Neuroprosthetics for paralysis: a new implant on the spinal cord

